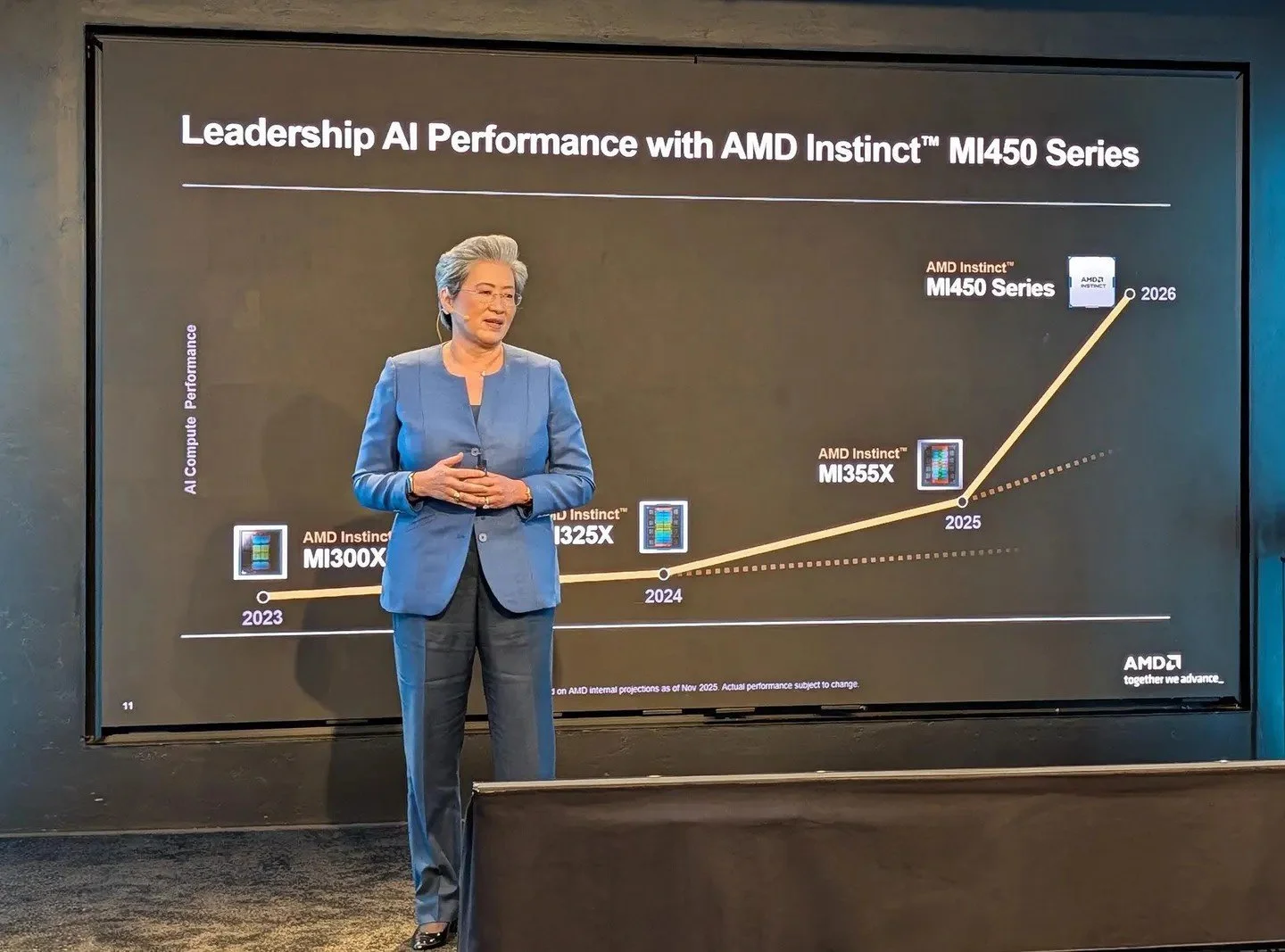

With a tee-up from its amazingly accomplished CEO Dr. Lisa Su, AMD execs outlined a long-term growth model that calls for more than 35% compound annual revenue growth at the company level and a CAGR of over 60% in its profitable data center business over the next three to five years.

Cadence Design Systems made a major advancement with its system chiplet that may further accelerate the semiconductor industry’s migration toward evolving chiplet-based architectures. The company detailed the successful silicon bring-up of its system chiplet architecture, which is the cornerstone of a broader chiplet ecosystem vision designed to push modular silicon platforms forward. I first wrote about Cadence’s system chiplet earlier this year…

In the drive to build more powerful AI platforms and denser compute clusters with fast interconnects, one of the unsung, foundational technologies required is also becoming one of the most critical: precision timing. Every AI server, optical module, and high-speed network link (among many other things) depends on accurate, precision timing signals to keep hundreds or thousands of processors synchronized. Without them, latency increases, data errors multiply, and efficiency drops. That’s where SiTime has carved out its niche.

Over the last few days, the U.S. Department of Energy (DOE) announced a couple of strategic partnerships to build no fewer than four powerful AI supercomputers, spread across two national laboratories. AMD and Nvidia will be powering two major U.S. government-backed AI infrastructure projects: AMD with HPE for Sovereign AI Factory supercomputers and Nvidia with Oracle for the DOE’s largest AI system yet, though Oracle will also be involved with AMD’s project.

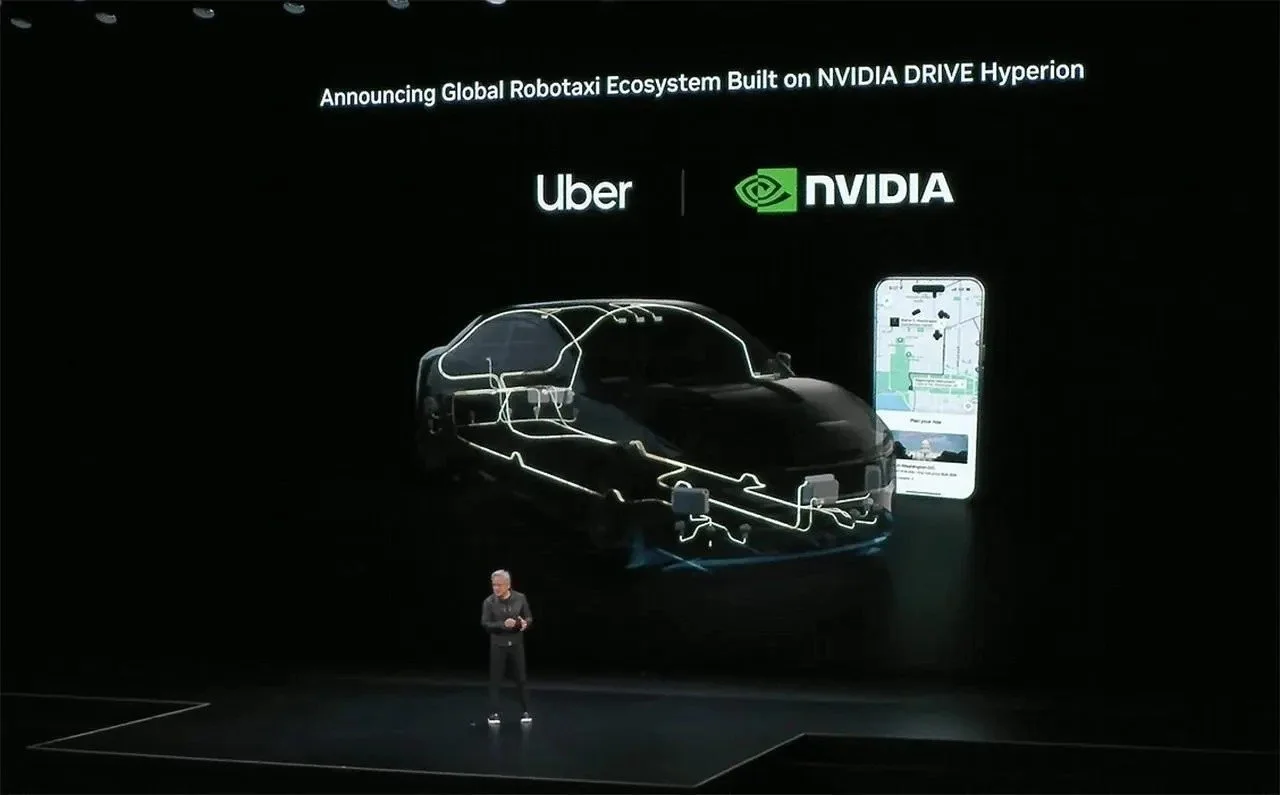

Nvidia and Uber further solidified a date the AV industry has been striving towards for years: large-scale robotaxis in regular service. The two companies plan to begin ramping a global autonomous fleet in 2027, growing toward 100,000 vehicles that will eventually roll directly onto Uber’s ride-hailing network. The backbone of the autonomous solution is Nvidia’s DRIVE AGX Hyperion 10 platform running the company’s DRIVE AV software stack, paired with a joint “AI data factory” leveraging Nvidia’s Cosmos development platform that will train foundational AI models on “trillions” of real-world and synthetic driving miles.

With its new Maverick-2 accelerator, built on what the company calls an Intelligent Compute Architecture, NextSilicon is betting on a long-pursued but rarely realized approach to accelerating HPC and data center workloads, known as dataflow computing. A dataflow architecture is designed such that the data itself, not instruction sequences, drives computation. The company believes it’s finally solved the twin barriers that kept dataflow architectures confined to research labs: programmability and practicality.

The company unveiled two flagship processors, the Snapdragon 8 Elite Gen 5 for smartphones and the Snapdragon X2 Elite for PCs, that not only push performance and efficiency forward but will also hopefully serve as the foundation for a new class of AI-fueled personal agents.

In the world of high-tech electronics, timing is everything, literally. Long-standing precision timing solutions company SiTime Corp. is aiming to reset the clock, so to speak, on how resonators are designed and deployed. The company’s newly announced Titan platform introduces a family of MEMS-based resonators that are markedly smaller, more resilient, and more easily integrated than traditional quartz designs.

In the semiconductor industry, virtually every major chip maker leverages physically accurate digital twins and simulation technologies throughout the design and manufacturing process to gain invaluable insights into their devices before a single wafer is prepped at the fab. When building chips, it is essentially a given that simulations and digital twins are used early and often to ensure optimal performance, power, and area (PPA), but the same can’t be said in other industries. Even if we scale up only to the system level, for example, digital twins have been adopted by only a small fraction of companies. In this day and age of gigawatt AI factories and advanced data centers, however, it’s borderline silly to not leverage digital twins early in the design phase of complex projects.

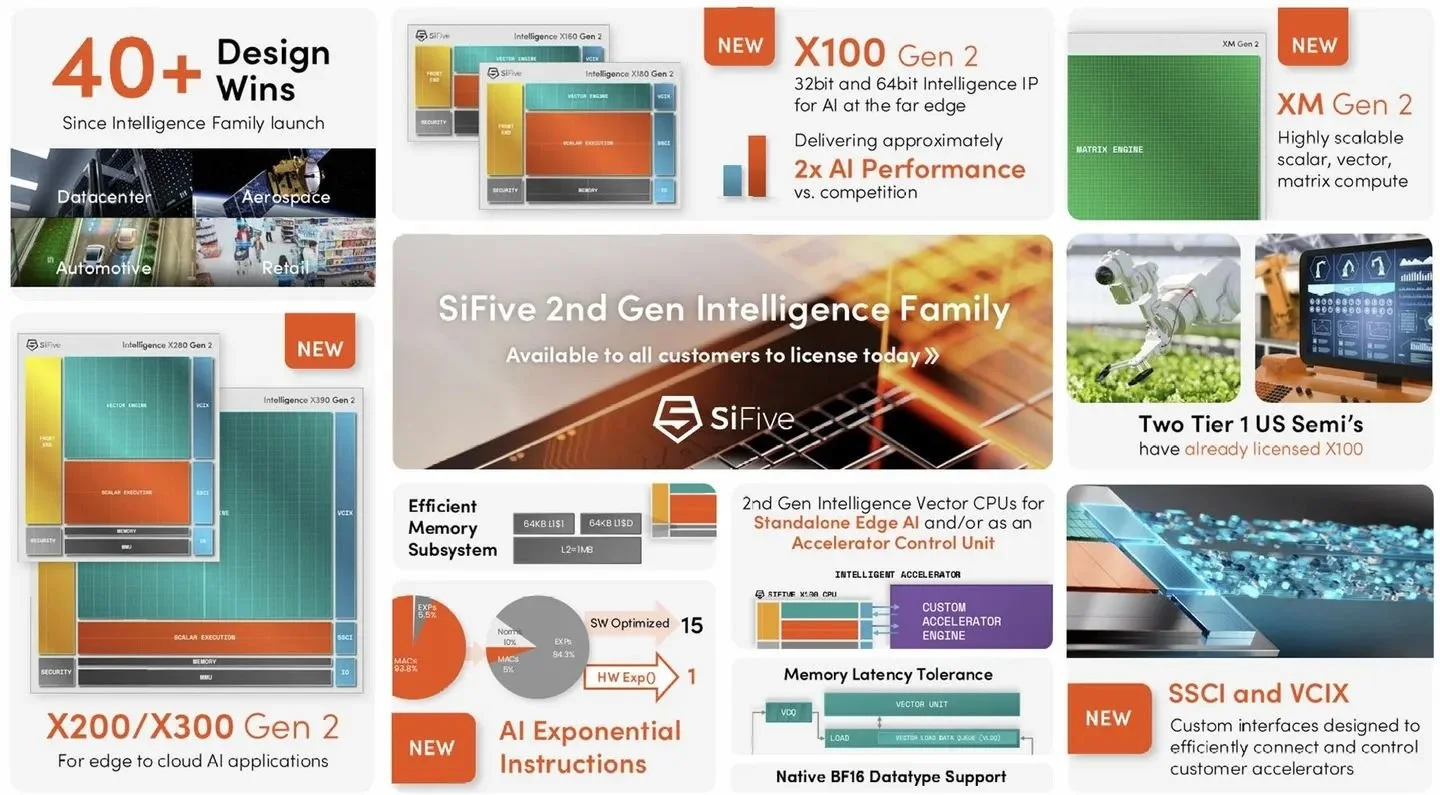

SiFive just announced an array of new additions to its product stack that run the gamut, from tiny, ultra-low-power designs for far edge IoT devices, to more powerful engines for AI data centers, and everything in between. SiFive’s 2nd Generation Intelligence Family of RISC-V processor IP includes five new products: the X160 Gen 2 and X180 Gen 2, both of which are brand-new designs, in addition to upgraded versions of the X280, X390, and XM cores.

The Google Pixel 10 Series arrives at a time when the smartphone market feels more than saturated, and even predictable. Yearly cycles bring faster processors, brighter displays, and incremental camera bumps. What stands out this year is not that the Pixel 10 Pro XL can match other flagships in hardware refinement, but that Google is repositioning its Pixel 10 series as a contextually aware assistant, with thoughtfully built, helpful AI at its core.

Nvidia’s Jetson line-up has long been the company’s proving ground for embedded AI computing at the edge, especially in robotics, industrial automation and autonomous vehicles. With the new Jetson AGX Thor developer kit, Nvidia is introducing a platform targeted at advancing machine learning in an arena where “physical AI” (robots, autonomous machines, and sensor-rich devices) must process vast amounts of data in real time.

AMD Ryzen 9 9955HX3D Competitive Analysis: Gaming Performance With Fire Range HX3D Laptops

In a head-to-head battle of AI accelerators, the results are in, and Axelera AI didn’t just win, it ran laps around the competition.

Consumers looking to upgrade from an older RTX xx50 GPU or legacy, mid-range GTX-class GPU, to a low-power modern graphics card may want to check out the GeForce RTX 5050.

AMD’s latest achievement signals not only the company’s technical chops, but its commitment to driving more sustainable solutions in accelerated computing.

With the ZBook Ultra, HP may have hit a new sweet spot between traditional power users and the emerging generation of AI-driven developers and creators.

In their 40-year history, FPGAs have enabled a multitude of innovations, and even today they show no signs of stopping.
